July 11, 2021

On-Demand Infrastructure: Migrating On-Prem Engineering Tools to Amazon AWS

Retrospect Engineering has always hosted its engineering tools on-premises. For source control, we’ve used CVS, Subversion, and now GitHub Enterprise. For issue tracking, we’ve used Bugzilla. Recently, we needed to move colos, which could have meant a week of downtime for the Engineering team.

I thought it would be a great opportunity to try migrating our critical infrastructure to the cloud as a stop-gap solution, so over a weekend, I migrated our on-prem GitHub Enterprise and Bugzilla to Amazon AWS, reachable through AWS Client VPN. The entire deployment cost $300 for the two weeks we had it active, and the team did not lose a day to downtime.

Amazon AWS

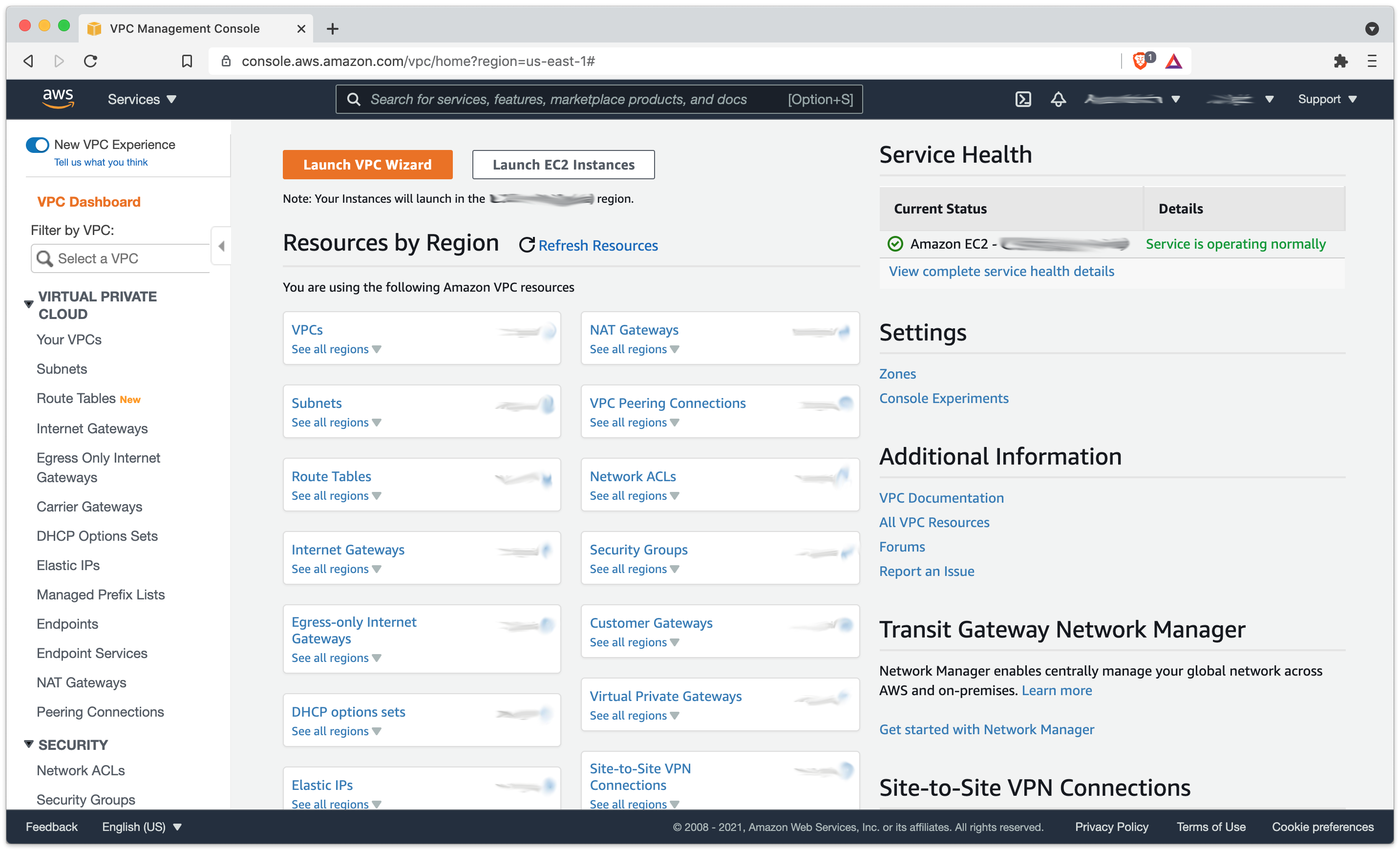

Amazon AWS provides all of the services we needed to set up a private cloud with the necessary topology. The building blocks we used were VPC, EC2, S3, and Route 53. The AWS Management Console provides a single interface to the entire breadth of AWS’s services. Note that some, like S3, provide a global view, whereas others, like VPC, only show one region at a time.

Amazon VPC

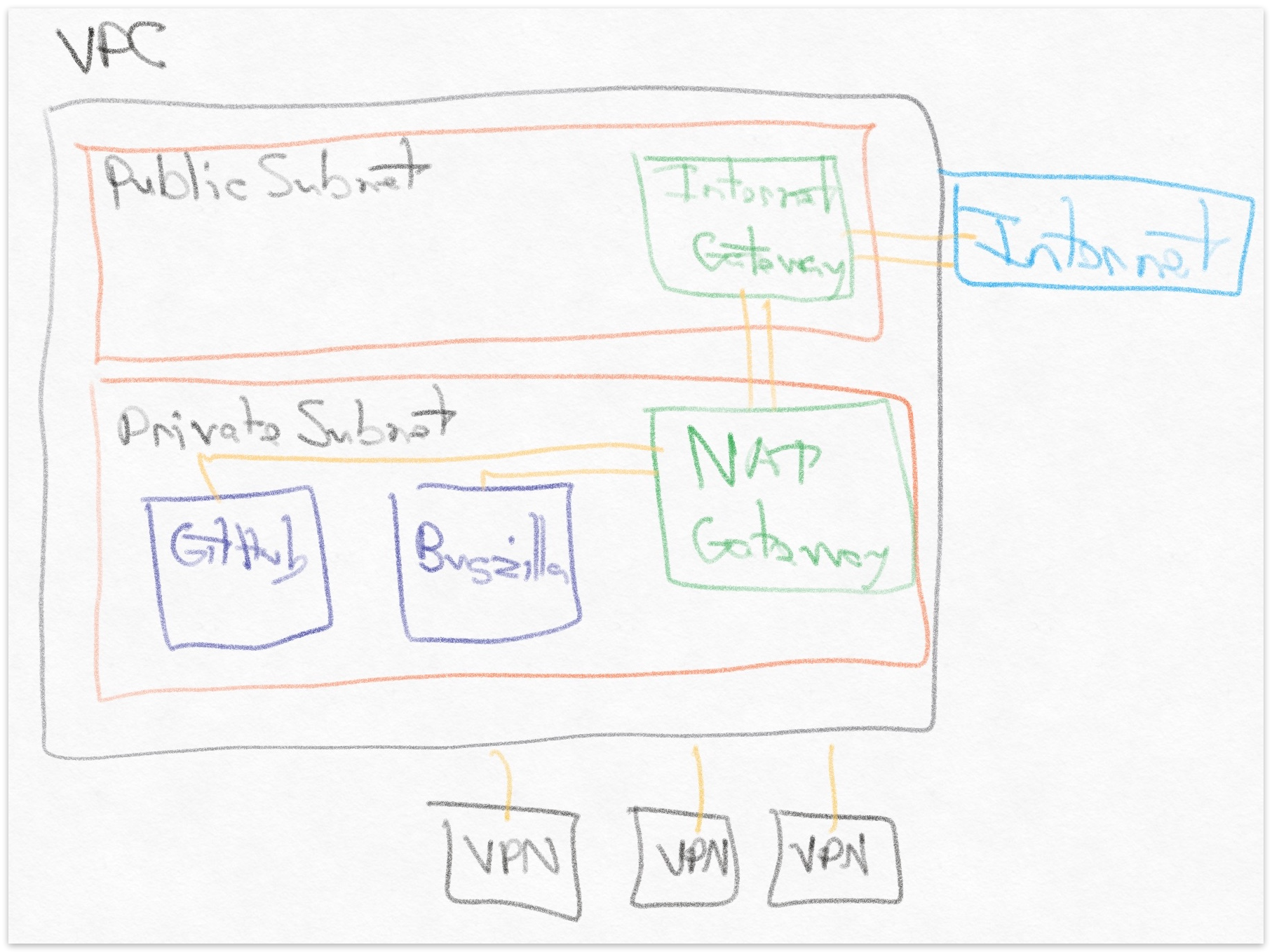

Amazon Virtual Private Cloud (VPC) is the overarching service with the necessary components for building a private cloud.

I used these instructions to set up the following:

- VPC: Private cloud instance with associated CIDR ranges.

- NAT Gateway: Allows private-subnet EC2 instances to reach the internet through the public subnet, using an Elastic IP (doc).

- Internet Gateway: Allows public-subnet traffic to connect to internet.

- Subnets: Both private and public. EC2 instances on a private subnet within a VPC cannot access the internet without a NAT Gateway on a public subnet and an Internet Gateway that the public subnet routes to.

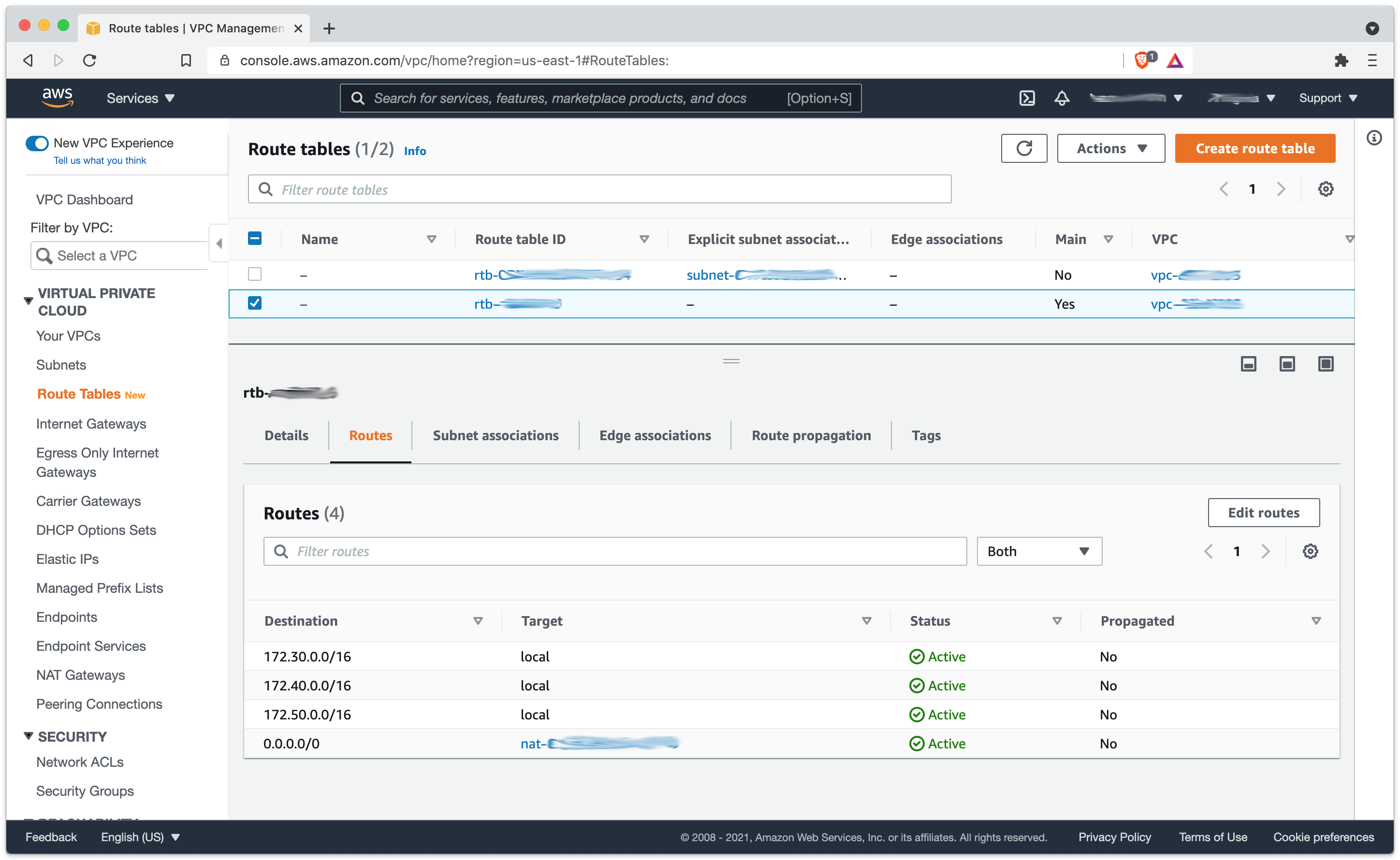

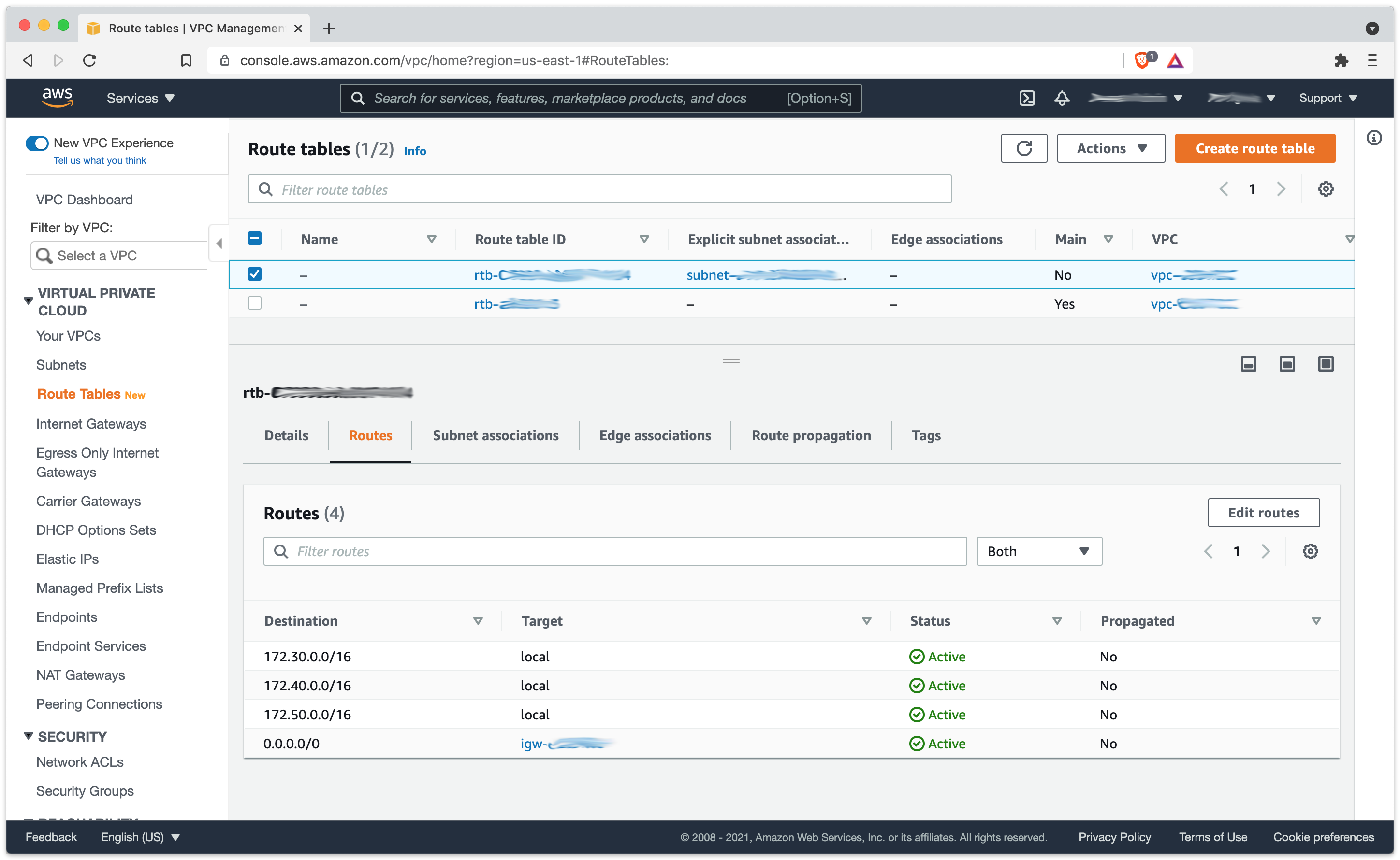

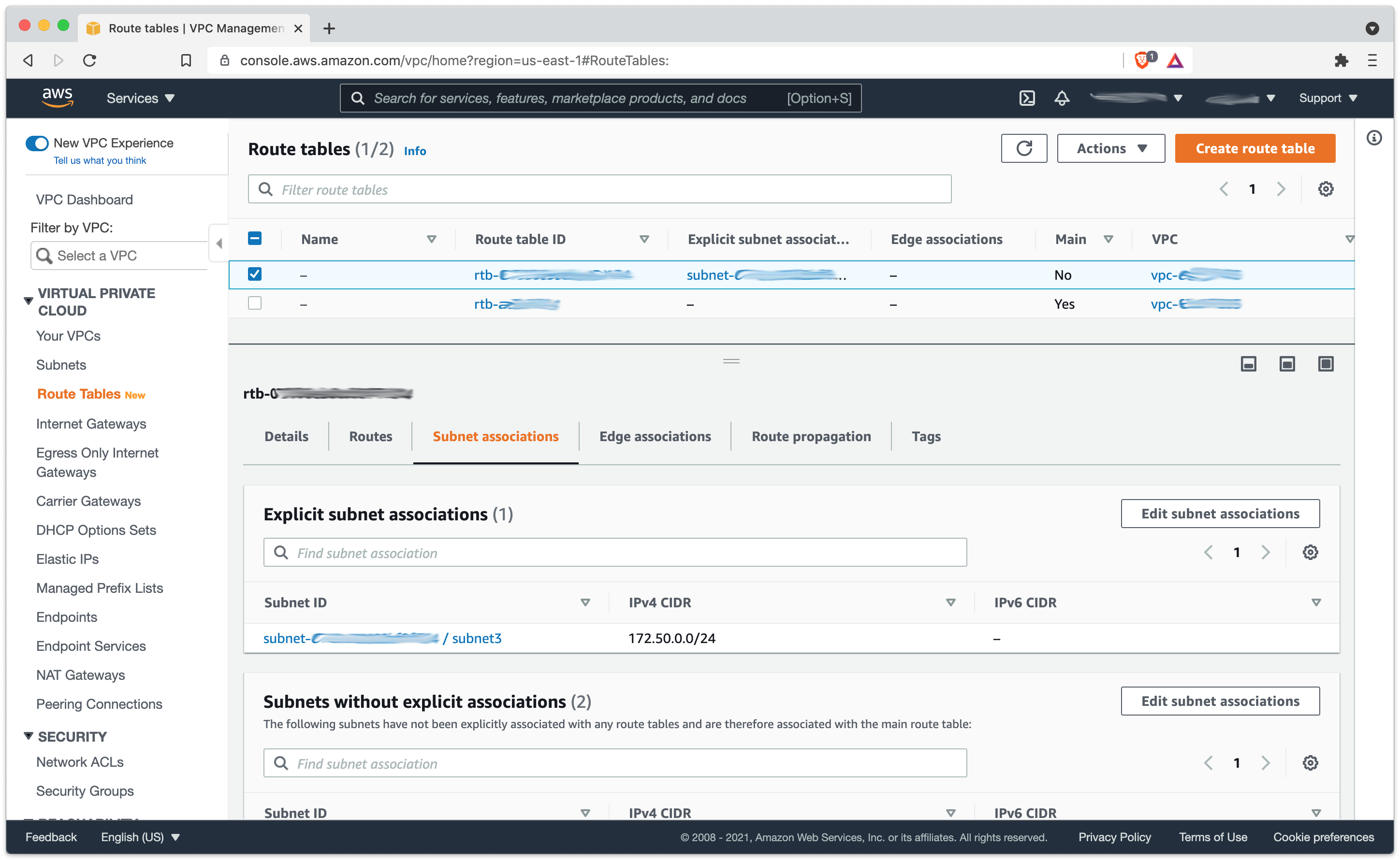

- Route Tables: Associated with subnets to direct traffic.

- Reachability Analyzer: Analyzes whether one component can reach another component. I used this to understand why an EC2 instance could not connect to the internet.

- Client VPN Endpoint: Lets endpoints running an OpenVPN-based client connect to the private cloud. I followed these instructions and had to set up a new certificate in AWS Certificate Manager.

Most of the setup was straightforward, but the one piece that took me a while to get right was the route tables for the subnets. The private subnet needed to point all traffic to the NAT Gateway on the public subnet, while the public subnet needed to point all traffic to the Internet Gateway. That configuration allowed EC2 instances on the private subnet to connect to the internet.
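The routing described above can be sketched in a few lines of Python. This is a toy model rather than AWS API code: the VPC CIDR range, the target labels, and the function names are all hypothetical, since the article does not list its actual addressing. It only illustrates how the most specific matching route decides each hop.

```python
import ipaddress

# Hypothetical addressing: the article does not give its CIDR ranges.
VPC_CIDR = "10.0.0.0/16"

# Each route table maps a destination CIDR to a target.
PRIVATE_ROUTES = {VPC_CIDR: "local", "0.0.0.0/0": "nat-gateway"}
PUBLIC_ROUTES = {VPC_CIDR: "local", "0.0.0.0/0": "internet-gateway"}


def next_hop(route_table, destination):
    """Return the target of the most specific route matching destination."""
    address = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in route_table.items()
        if address in ipaddress.ip_network(cidr)
    ]
    if not matches:
        raise LookupError(f"no route to {destination}")
    # Longest prefix wins, so the VPC-local route beats the default route.
    return max(matches, key=lambda match: match[0].prefixlen)[1]


def path_from_private_subnet(destination):
    """Trace the hops for a packet sent by a private-subnet instance."""
    hops = [next_hop(PRIVATE_ROUTES, destination)]
    if hops[0] == "nat-gateway":
        # The NAT Gateway lives in the public subnet, so the public
        # route table decides where its traffic goes next.
        hops.append(next_hop(PUBLIC_ROUTES, destination))
    return hops


print(path_from_private_subnet("140.82.112.3"))  # an address outside the VPC
print(path_from_private_subnet("10.0.1.25"))     # an address inside the VPC
```

If the private table's default route were missing, `next_hop` would raise `LookupError` for any outside address, which is roughly the symptom of an instance that cannot reach the internet.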

Amazon EC2

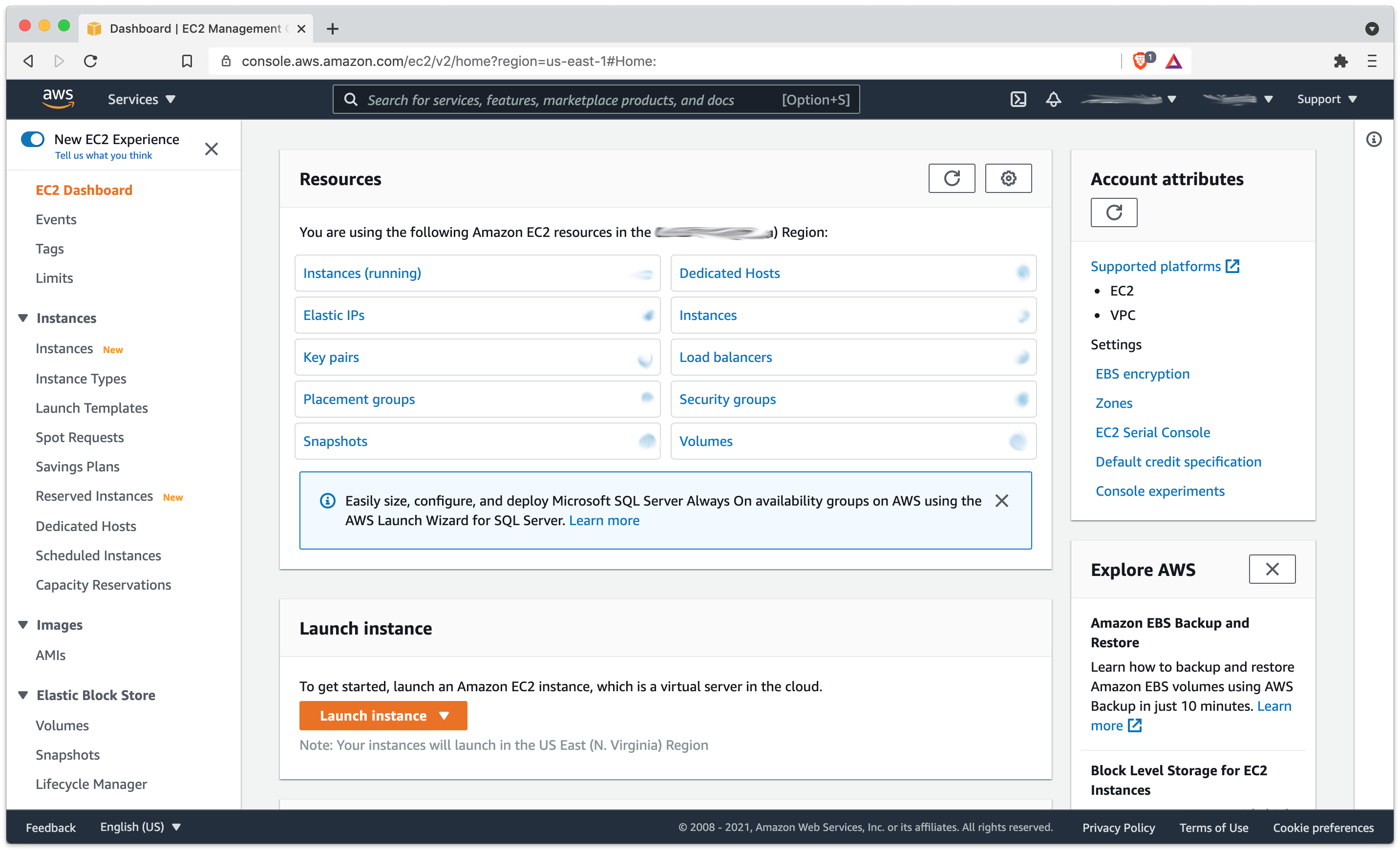

Amazon Elastic Compute Cloud (EC2) runs server instances for Windows, Linux, and macOS, along with block storage, security groups, key pairs, and IP addresses.

We’ve been using EC2 for 11 years in various forms, and it now offers an overwhelming number of instance types (396) to choose from, from general-purpose to compute-optimized to memory-optimized, with x86 and ARM support. For our purposes, we used t2 instances: Amazon Linux 2 on t2.medium for Bugzilla and GitHub Enterprise Server on t2.xlarge for GitHub. GitHub provides detailed setup instructions. Let’s walk through the EC2 components:

- EC2 Instances: Runs servers.

- EC2 AMIs: These are server images. We used the standard Amazon Linux 2 for LAMP and GitHub’s provided AMI.

- EBS: Elastic Block Store. These volumes attach to EC2 instances as block devices. Use these docs to format and mount them on Linux.

- Elastic IP: Public-facing IP addresses. These are available both in VPC and EC2.

Amazon S3

Amazon Simple Storage Service (S3) is used to store objects in the cloud. This service was one of the first from AWS; Bezos described it as malloc for the internet.

We used S3 to host the GitHub and Bugzilla backups. We use S3 for everything, with 77 buckets and counting.

Amazon Route 53

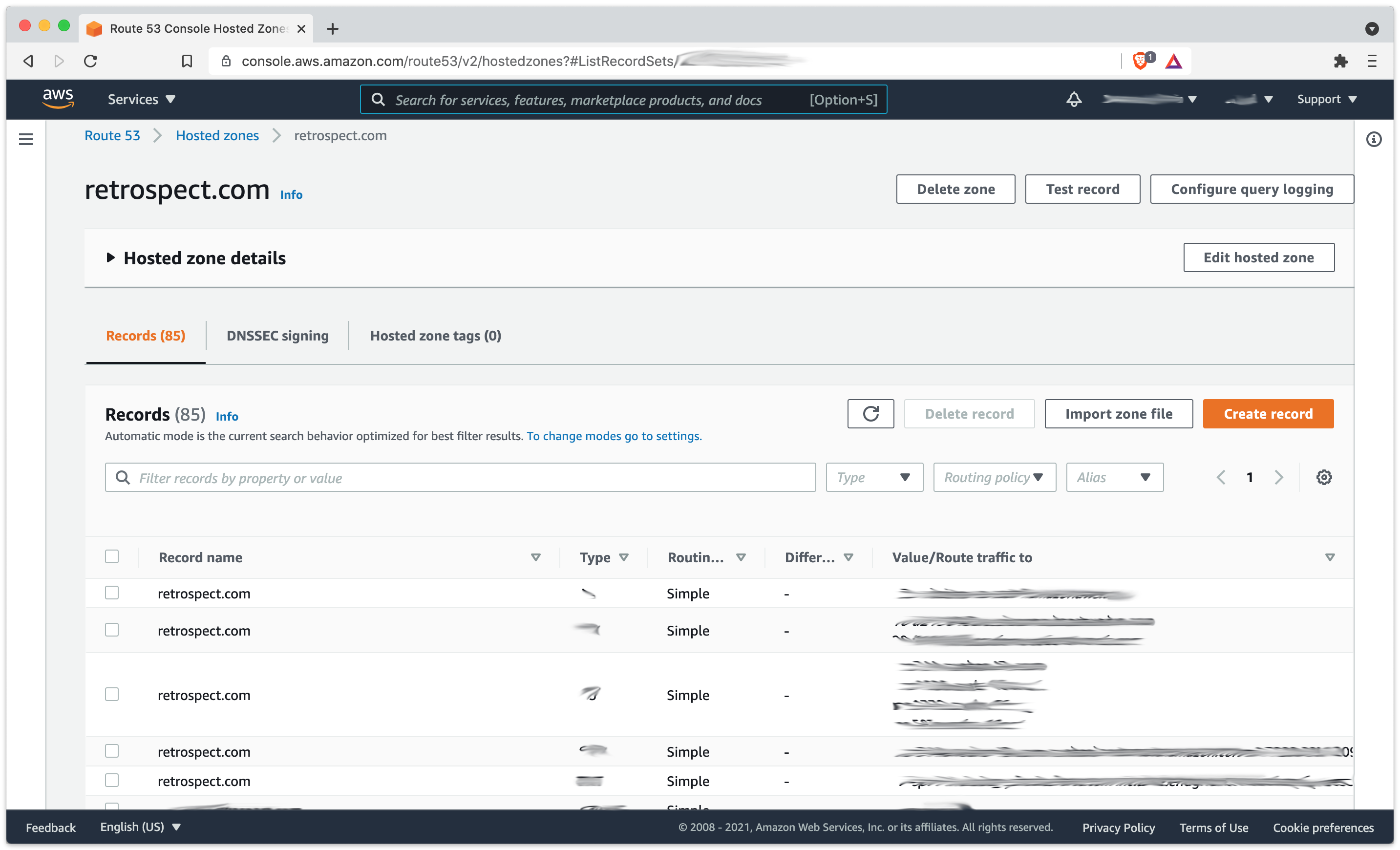

Amazon Route 53 is used for DNS routing.

In addition to handling our website and mail DNS routing, we use it to give private network instances a public DNS name, such as github.retrospect.com. Relying on a public DNS provider makes the records simple to maintain and update, and lookups are fast compared to the private DNS setups we’ve used in the past. We used to use Zerigo for DNS until Zerigo had one too many outages. (Zerigo shut down within a year.) Migrating to Route 53 took about an hour, and the bill went from $13/mo to $3/mo. Finally, abstracting away the IP address allowed us to seamlessly switch from colo to AWS to new colo without changing our Git development settings.

Migration

The actual migration was simple. I took the most recent backups from GitHub and Bugzilla, uploaded them to S3, then downloaded them from S3 onto the Bugzilla instance. I used the Bugzilla server as the staging server for the GitHub restore.

GitHub Enterprise has a GitHub repo for backup and restore utilities. After setting up the configuration file, we do backups with:

ghe-backup

tar zcvf github.tgz ./backup-utils

And you can do a restore with:

tar xvf github.tgz

ghe-restore github.retrospect.com

For Bugzilla, we simply tar/gzip the directory and dump the MySQL database:

tar zcvf bugzilla.tgz /var/www/html/bugzilla

mysqldump -u root -p bugzilla | gzip > bugzilla.sql.gz

We restore with:

tar xvf bugzilla.tgz

gunzip < bugzilla.sql.gz | mysql -u root -p bugzilla

One issue was GitHub’s compression. I had been using a spare Mac VM to do GitHub backups. When I compressed the backup to transfer it to S3, I used macOS’s Archive Utility from the right-click menu. As it turns out, gzip didn’t know what to make of that. I had to tar/gzip it again and transfer it a second time before I could uncompress it on the EC2 instance. Another important note: do not pass “-h” to tar, because it follows symlinks rather than preserving them, which doubled the size of the GitHub backup because of the “current” symlink.
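Both pitfalls can be reproduced with Python's standard library. The sketch below is illustrative only: the snapshot directory name and the helper names are made up, and it assumes the Archive Utility output was a ZIP file, which is what the Finder's Compress command normally writes. `archive_kind` checks the leading magic bytes before a transfer, and `archived_bytes` compares an archive that preserves symlinks with one that follows them, the equivalent of tar's “-h”.

```python
import os
import tarfile
import tempfile

GZIP_MAGIC = b"\x1f\x8b"
ZIP_MAGIC = b"PK\x03\x04"


def archive_kind(path):
    """Classify a file as 'gzip', 'zip', or 'unknown' from its first bytes."""
    with open(path, "rb") as handle:
        head = handle.read(4)
    if head.startswith(GZIP_MAGIC):
        return "gzip"
    if head.startswith(ZIP_MAGIC):
        return "zip"
    return "unknown"


def archived_bytes(source_dir, archive_path, follow_symlinks):
    """Write a .tgz of source_dir and return the total size of its members."""
    with tarfile.open(archive_path, "w:gz", dereference=follow_symlinks) as tar:
        tar.add(source_dir, arcname=os.path.basename(source_dir))
    with tarfile.open(archive_path, "r:gz") as tar:
        return sum(member.size for member in tar.getmembers())


with tempfile.TemporaryDirectory() as work:
    # Hypothetical layout: one snapshot plus a "current" symlink to it.
    root = os.path.join(work, "backup-utils")
    snapshot = os.path.join(root, "data", "snapshot-1")
    os.makedirs(snapshot)
    with open(os.path.join(snapshot, "repos.bin"), "wb") as handle:
        handle.write(os.urandom(4096))
    os.symlink("snapshot-1", os.path.join(root, "data", "current"))

    preserved = archived_bytes(root, os.path.join(work, "keep.tgz"), False)
    followed = archived_bytes(root, os.path.join(work, "follow.tgz"), True)
    kind = archive_kind(os.path.join(work, "keep.tgz"))

print(kind, preserved, followed)
```

With symlinks followed, the snapshot is stored twice, once under its own name and once under “current”, so the payload doubles.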

Another issue was Bugzilla’s backup. A recent attachment broke the mysqldump export of the MySQL database. I had to delete the attachment from the MySQL attach_data table to get a working export. It was 2GB, going back 10 years.
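A cheap sanity check on a compressed dump can catch a broken export before it is needed for a restore. The sketch below is an assumption-laden illustration, not what we ran at the time: it relies on mysqldump's default behavior of ending its output with a “-- Dump completed” comment (which is absent if comments are disabled), and the function name is hypothetical.

```python
import gzip
import zlib

# mysqldump ends its output with this comment when comments are enabled.
COMPLETION_MARKER = b"-- Dump completed"


def dump_looks_complete(path):
    """Return True if path decompresses cleanly and ends with the marker."""
    last_line = b""
    try:
        with gzip.open(path, "rb") as stream:
            # Stream line by line so a multi-gigabyte dump is never
            # held in memory all at once.
            for line in stream:
                if line.strip():
                    last_line = line
    except (OSError, EOFError, zlib.error):
        # Not a gzip file, or a truncated or corrupted one.
        return False
    return last_line.startswith(COMPLETION_MARKER)
```

Calling `dump_looks_complete("bugzilla.sql.gz")` after the export would flag a dump that stopped partway through, although it says nothing about whether the rows themselves are intact.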

The final issue was setting up Bugzilla itself. Installing the correct Perl CPAN modules is a pain, even with yum as a tool.

Migrating back from AWS to the new colo was just as easy, using the same steps in reverse.

Beyond AWS

There are three major cloud providers: Amazon AWS (at 33% market share), Microsoft Azure (at 15% market share), and Google Cloud (at 5% market share). I used Amazon AWS because we were already using it. Let’s look at the services offered by the other two platforms:

For Microsoft Azure:

- Virtual Network for private cloud

- Virtual Machines for servers

- Blob Storage for object storage

- Azure DNS for DNS entries

- VPN Gateway for VPN service for endpoints into the private cloud. See “Remote work using Azure VPN Gateway Point-to-site”.

For Google Cloud:

- VPC for private cloud

- Compute Engine for servers

- Cloud Storage for object storage

- Cloud DNS for DNS entries

- Cloud VPN for peer-to-peer network connectivity, but GCP does not offer an equivalent to AWS Client VPN Endpoint. There is a feature request for it.

On-Demand Infrastructure

Cloud is on-demand infrastructure. It took me a weekend, from googling “AWS VPN” to sending out VPN profiles to the team, to get the temporary environment set up. By migrating our source control and issue tracking database to Amazon AWS, the Engineering team didn’t miss a day of work while our servers were on a truck. The IT team at the other end set the environment up quickly once the hardware arrived, but we would have missed seven days of work without the AWS infrastructure.